Artificial intelligence (AI) is now top of mind in corporate boardrooms and executive offices. From traditional machine learning (ML) to generative AI and emerging agent-based approaches, AI is widely expected to transform how enterprises operate and compete.

At the same time, expectations are outpacing results. A 2024 RAND Corporation report cited estimates that more than 80% of AI projects fail. It identified several root causes, including organizations lacking “the necessary data to adequately train an effective AI model.”[1]

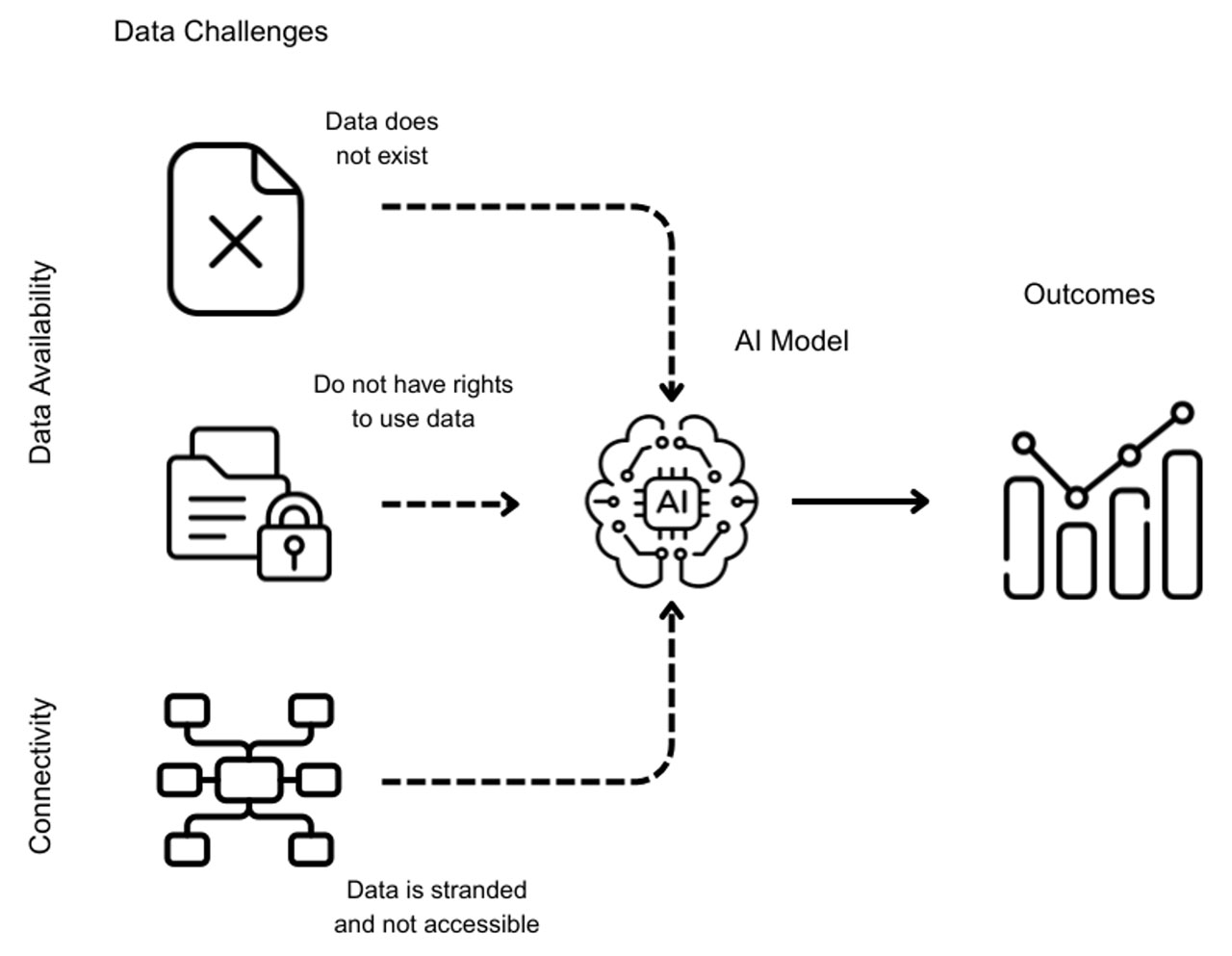

This finding highlights a foundational challenge many enterprises underestimate: getting the right data, from the right places, to support AI-enabled systems and operations. The challenge takes two forms: availability (does the data exist?) and connectivity (can the data be accessed and shared?). Without this foundation, AI initiatives stall, underdeliver, or fail to scale.

As a fractional Chief AI Officer, I see this pattern repeatedly. The issue is not a lack of ambition or investment in AI, but insufficient focus on enabling the data foundation and infrastructure required to support it. This challenge is complex, crosses organizational boundaries, and requires executive-level attention. Organizations that delay addressing these foundational issues often find that competitors who move first scale AI faster, lock in operational advantages, and raise performance expectations in ways that are hard to match.

This post explores why data and connectivity are critical to enterprise AI success and outlines practical steps leaders should consider. It is the first in a series of articles examining what it means for enterprises to be future-ready in an increasingly AI-enabled world.

[1] J. Ryseff, B. De Bruhl, and S. Newberry, “The Root Causes of Failure for Artificial Intelligence Projects and How They Can Succeed,” Research Report, RAND Corporation, August 13, 2024.

AI Is a Key Enterprise Capability

AI is already embedded across many enterprise functions. Machine learning is commonly used to detect fraud, optimize operations, predict maintenance needs, and improve product quality. Natural language processing supports customer and technical support operations at scale.

Generative AI has expanded these capabilities further by combining sensor data, operational logs, and human inputs to diagnose anomalies, plan optimization strategies, and recommend actions. Emerging agentic AI approaches aim to move beyond recommendations to orchestrating and executing operational workflows.

Data and Connectivity Are Strategic Enablers for AI

An enterprise’s AI capabilities depend on something far more basic than advanced models: reliable availability of, and access to, operational data and connected systems. AI systems need consistent access to the right data to train and retrain models. Once deployed, that same data provides the real-world signals AI systems interpret and act upon.

Connectivity is what makes this possible at enterprise scale. It enables data to be accessed, shared, and integrated across systems, environments, and AI applications. Without connectivity, data remains fragmented, delayed, or inaccessible. This limits AI’s ability to support decisions, automation, and execution.

In practice, obtaining the data AI needs is a long-standing challenge. Enterprise data is distributed across internal applications, operational systems, and a wide range of physical assets (equipment and devices) spread across multiple facilities and geographies. Many of these assets and systems operate in standalone environments or proprietary legacy networks outside traditional IT domains.

The consequences of poor data and connectivity include, but are not limited to, the following:

- AI pilots fail to move beyond proofs of concept because critical operational data is missing, inaccessible, or unreliable at scale

- Automation and agent-based initiatives stall when AI-generated insights cannot be acted upon due to unconnected or unmanaged systems

- AI-driven decisions based on incomplete data increase the risk of unsafe or unreliable outcomes, exposing the enterprise to significant operational, financial, and legal risk

- “Tried and true” AI use cases (e.g., predictive maintenance, anomaly detection, and operations optimization) underperform without real-time visibility into assets

- AI investments struggle to demonstrate ROI because foundational data and connectivity issues were never addressed upfront

Data Challenges for AI Fall into Three Categories

An enterprise’s AI data challenges appear in two basic forms: availability of the data, and connectivity of the systems needed to access and share it. Data is the raw input AI depends on; connectivity makes that data accessible, timely, and usable.

Within these two forms, the AI data challenges generally fall into three categories:

- The data does not exist. This is a challenge about availability. Enterprise assets may not be instrumented with the sensors needed to collect the data AI models require. These “dark assets” are common in many industrial environments. In other cases, the data may exist but lack the contextual information (metadata) needed to make it usable by AI. A 2025 AI readiness survey found that 54% of its 272 respondents named “data quality and availability” as their top challenge in adopting AI in an industrial environment.[2]

- The data is stranded and not easily accessed. This is a challenge about connectivity. Legacy assets in industrial and manufacturing facilities were designed for a pre-connectivity environment, so their data remains trapped on the asset itself or within separate, proprietary operational technology (OT) networks. Other data resides in enterprise applications (e.g., ERP, MES, CMMS, LIMS, BMS) that use different schemas and standards, making integration and communication difficult. In the same 2025 survey, 48% of respondents cited integration with legacy systems and data silos, making it the second-largest challenge.[3] Connectivity matters beyond data access, too: as organizations move toward automation and agent-driven execution, disconnected systems stop being tolerable inefficiencies and become hard blockers to action.

- The data is constrained by rights and governance. This is a challenge about availability. Some data is owned or controlled by third parties such as OEMs, suppliers, or partners. In other cases, cybersecurity, safety, or regulatory requirements limit how and where data can be used.

AI data challenges are not uniform. Each category calls for different strategies and tactics, and no one-size-fits-all approach addresses them all.

[2] “Accelerating AI Use Cases in 2026: The Industrial Data, Intelligence and AI Readiness Survey Report,” HiveMQ report, 2025. The 2026 Industrial Data, Intelligence & AI Readiness Survey was conducted by IIoT World and gathered insights from 272 professionals across manufacturing, energy, logistics, transportation, smart cities, healthcare, and other industrial sectors.

[3] Ibid.

These Challenges Require Strategic and Executive Attention

AI is no longer just a technology initiative; it is a set of business and operational initiatives that affect revenue growth, cost structure, customer experience, and enterprise risk. When AI initiatives fail or underperform, the consequences extend beyond missed technical milestones to include delayed returns on significant investments, higher operating costs from manual workarounds, and heightened financial, reputational, or safety exposure.

Given the scale of these implications, AI data and connectivity challenges cannot be treated as isolated IT or operational issues. They represent a strategic business priority that requires executive ownership, clear accountability, and deliberate investment decisions. Until these issues are elevated to the executive agenda and treated as shared enterprise responsibilities, AI teams will continue to be held accountable for outcomes they cannot fully control.

What makes these challenges particularly complex is their enterprise-wide nature. The challenge is not that data and connectivity problems are new, but that as AI becomes embedded in decision-making and execution, gaps that were once manageable now directly constrain enterprise performance and increase risk. Addressing them often requires coordinated investment across IT, operations, engineering, security, legal, and external partners to modernize data access, connectivity, and governance in a sustainable way. Piecemeal funding through individual projects may deliver short-term progress, but it rarely creates the durable foundation AI demands and often increases long-term cost and complexity. Lasting improvement comes from treating data and connectivity as shared enterprise assets, backed by executive sponsorship, aligned incentives, and a roadmap that balances near-term wins with long-term scalability.

Five Next Steps for Enterprise Leaders to Consider

Addressing AI data and connectivity challenges requires deliberate attention and leadership. Executives and leaders should consider the following next steps:

- Re-examine your active AI initiatives through a data and connectivity lens. Review current AI projects and pilots, and identify those where missing data, poor data accessibility, or weak connectivity is hindering progress and performance.

- Identify where critical operational data is isolated or stranded. Conduct a focused review of key operational sites to find assets, devices, or systems that operate outside the core IT environment. Inventory what is instrumented, what is connected, where data is manually extracted, and where ownership or access rights are unclear. Prioritize sites where AI is planned or already in use.

- Make data and connectivity explicit pillars of your AI strategy. Update your AI strategy and roadmaps to clearly state how data availability and connectivity will be addressed. Treat these foundations as board-level, multi-year priorities required to scale AI across the enterprise.

- Assign clear executive ownership. Designate accountable executive leadership for enterprise data availability and connectivity across IT, operations, and business units. Ensure this role has authority, visibility, and budget influence.

- Incorporate data and connectivity considerations in your enterprise risk management plan. Assess how gaps in data and connectivity increase exposure across cybersecurity, safety, compliance, and business continuity.

Closing Thoughts and Final Guidance

As AI becomes embedded in core operations, weaknesses in data availability and system connectivity increasingly determine outcomes. These challenges cannot be solved through isolated technical fixes or delegated ownership. They require executive attention, clear accountability, and deliberate investment in the data and connectivity foundations AI depends on.

Before approving the next AI initiative, leaders should ask a simple question: are the systems that generate critical operational data truly accessible, connected, and governable at enterprise scale? Organizations that act decisively now will be better positioned to translate AI ambition into sustained business value and build a more resilient, future-ready enterprise.

If your AI initiatives depend on operational data, remote assets, or legacy infrastructure, don’t let data and connectivity gaps cause them to fail. Contact us to learn how Digi Infrastructure Management Solutions provide the connectivity, visibility, and control layer that makes AI possible.

FAQ

Why is there so much legacy unconnected equipment in many industrial sites and operations facilities?

Most industrial and operating facilities were built up over decades, and many predate the modern connected IT environment. They were designed to perform specific functions reliably and safely, and their assets were built for long lifecycles, not for data sharing or enterprise connectivity. Because production and industrial assets often operate for decades, replacing them simply to gain connectivity rarely makes economic sense. As a result, many machines, industrial control systems, and field devices still run on proprietary protocols, isolated networks, or older architectures that were never intended to integrate with modern IT systems. These environments were built to keep operations running, not to feed data into analytics or AI platforms.

Over time, these operating environments became a patchwork of connected and unconnected systems. While some newer assets and equipment may support modern networking and remote monitoring, a significant portion of operational technology remains difficult to access, integrate, or manage centrally. This “connectivity gap” is the natural outcome of long asset lifecycles, incremental upgrades, and the historical separation between operational technology and enterprise IT.

Can’t we just replace legacy equipment with modern connected systems?

In most industrial environments, wholesale replacement of legacy equipment is neither practical nor economically viable. Many operational assets are designed to run for decades, and as long as they work as intended, remain safe and reliable, and meet production requirements, replacing them purely for connectivity reasons is difficult to justify. The capital cost, operational disruption, downtime risk, and retraining requirements of large-scale equipment replacement often far outweigh the immediate benefits.

Sites that do modernize typically do so in phases over several years, focusing first on extending the useful life and visibility of existing assets rather than replacing them outright. For example, connecting legacy equipment to the IT network, or to other equipment, is a more realistic, lower-risk strategy. By enabling secure access to data and systems that are already in place, many enterprises begin improving visibility, analytics, and operational decision-making without disrupting production. This approach allows companies to layer in new capabilities, inform future capital planning with better data, and modernize more strategically.

How do unconnected or legacy systems actually affect AI initiatives?

AI systems depend on timely, reliable data from across the enterprise to generate accurate insights and support decision-making. When important operational assets and systems are not connected, the data AI relies on becomes incomplete, delayed, or inconsistent. This limits model performance, increases the need for manual data preparation, and reduces confidence in AI-driven outputs. In many cases, AI initiatives stall not because the models are ineffective, but because they cannot access the full picture of what is happening in real operations.

As organizations move from using AI for analytics and insights toward automation and more autonomous decision-making, the impact becomes even more significant. AI systems that cannot reliably interact with operational environments remain advisory tools rather than drivers of action and execution. Unconnected systems create blind spots that hinder both insight and execution, which makes data and connectivity foundational to realizing real business and operational value from AI.


About the Author

Benson Chan is the COO at Strategy of Things, a Silicon Valley-based firm that helps government and enterprises innovate with artificial intelligence and the Internet of Things. He has over 30 years of experience working with innovative technologies across Fortune 500 companies, start-ups, and government organizations. He served as the Chair of the NIST IoT Advisory Board, advising the federal government on the Internet of Things; as co-chair of the CompTIA and GTIA IoT Industry Advisor; and as an industry mentor in the US Department of Energy Building Technologies Office’s IMPEL program, which paired industry with National Laboratory researchers to foster entrepreneurial thinking.
